ChatGPT One Year On: The Bottleneck of Generative AI and the Opportunity of Web3

Authors: @chenyangjamie, @GryphsisAcademy

TL; DR:

1. Why do generative AI and Web3 need each other?

2022 can be called the year generative AI (Artificial Intelligence) took the world by storm. Before then, generative AI was limited to auxiliary tools for professionals. After the successive emergence of DALL-E 2, Stable Diffusion, Imagen, and Midjourney, AI-Generated Content (AIGC), the latest application of the technology, produced a large wave of trending content on social media. ChatGPT, released shortly afterwards, was a bombshell that pushed this trend to its peak. As the first AI tool that can answer almost any question from a simple text command (i.e., a prompt), ChatGPT has long since become a daily work assistant for many people. For the first time, people can feel the “intelligence” of artificial intelligence: it handles a variety of daily tasks such as document writing, homework help, email drafting, essay revision, and even emotional counseling, and the Internet is enthusiastically researching prompting tricks for optimizing ChatGPT’s output. According to a report by Goldman Sachs’ macro team, generative AI could boost labor productivity growth in the United States, raise global GDP by 7% (or nearly $7 trillion), and lift productivity growth by 1.5 percentage points within 10 years of generative AI’s development.

The Web3 field has also felt the spring breeze of AIGC, and the AI sector has risen across the board in January 2023

Source:

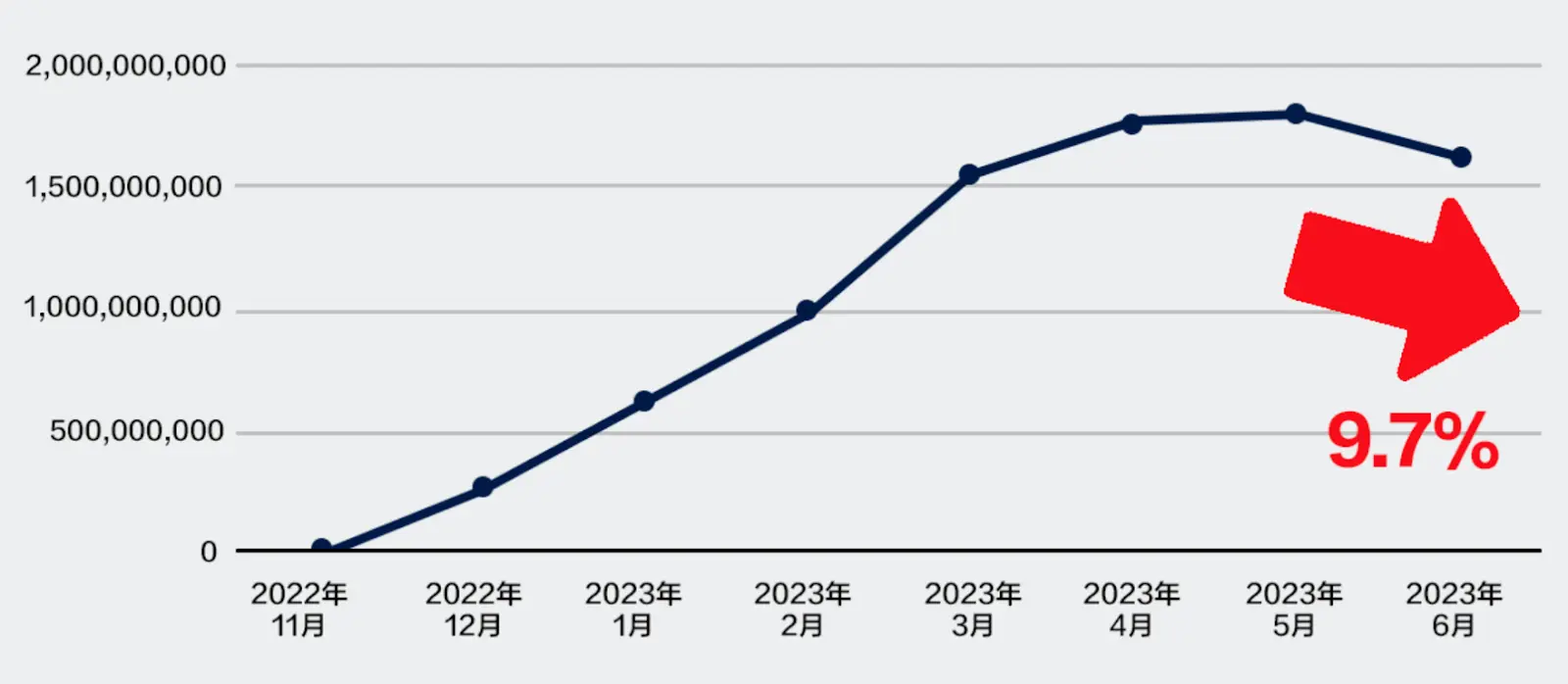

However, after the initial novelty faded, ChatGPT’s global traffic declined in June 2023 for the first time since its release (Source: SimilarWeb), and it is time to rethink what generative AI means and where its limits lie. The dilemmas generative AI currently faces include (but are not limited to): first, social media is flooded with unlicensed and untraceable AIGC content; second, ChatGPT’s high maintenance cost has forced OpenAI to lower generation quality to cut costs and improve efficiency; and finally, even the world’s largest models still produce biased results in some respects.

ChatGPT global desktop and mobile traffic

Source: Similarweb

At the same time, Web3, which is gradually maturing, with its decentralized, fully transparent, and verifiable characteristics, provides a new solution to the current dilemma of generative AI:

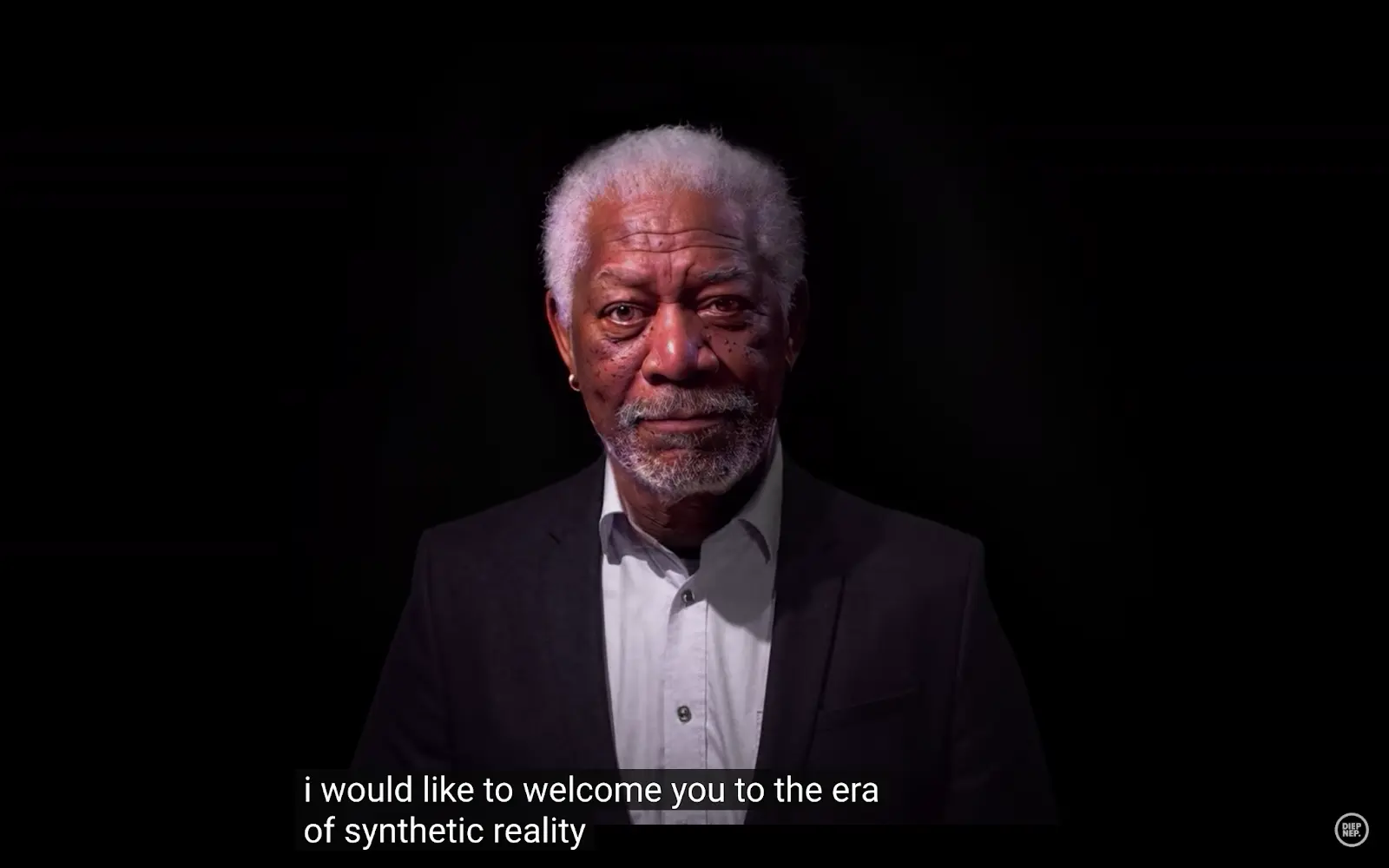

DeepFake Video: This is not Morgan Freeman

Source: Youtube

Even with the latest H100 to train GPT-3, the cost per FLOPs is still high

Source: substake.com

An AI designed to increase resolution would turn Obama into a white man

Source: Twitter

Automated on-chain analysis, monitoring on-chain information can obtain first-hand information

Source: nansen.ai

While generative AI and Web3 have their own challenges, their mutual needs and collaborative solutions will hopefully shape the future of the digital world. This collaboration will improve the quality and credibility of content creation, driving the further development of the digital ecosystem while providing users with a more valuable digital experience. The co-evolution of generative AI and Web3 will create an exciting new chapter in the digital age.

2. A technical summary of generative AI

2.1 Technical Background of Generative AI

Since the concept of AI was introduced in the 1950s, the field has gone through several ups and downs. Each key technological innovation has brought a new wave, and generative AI is no exception. As a concept proposed only within the past 10 years, generative AI has stood out from the many research sub-directions of AI thanks to the dazzling performance of recent technologies and products, and it attracted worldwide attention almost overnight. Before going further into the technical architecture of generative AI, we first need to explain what generative AI means in this article and briefly review the core technical components behind its recent explosion.

Generative AI is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. It is a model built on a deep learning neural network framework, trained on large amounts of data, and containing a huge number of parameters. The generative AI products that have recently come to people’s attention fall into two broad categories: image (and video) generation products driven by text or style input, and ChatGPT-style products driven by text input. Both types share the same core technology, namely a pre-trained large language model (LLM) based on the Transformer architecture. On this basis, the former adds a diffusion model that combines text input to generate high-quality images or videos, while the latter adds reinforcement learning from human feedback (RLHF) so that the logic of its output approaches that of a human.

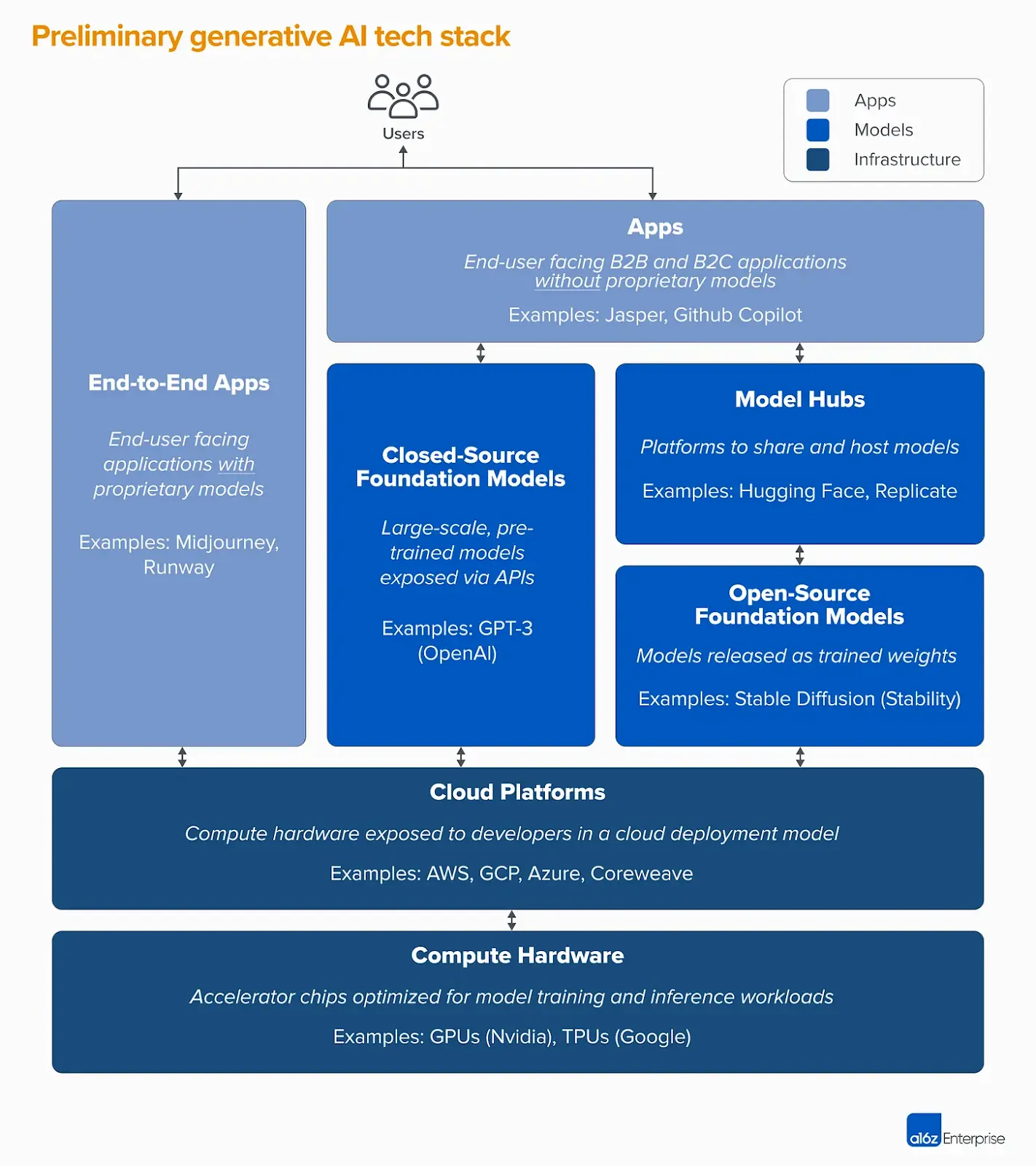

2.2 Current Technical Architecture of Generative AI:

Many excellent articles have discussed what generative AI means for existing technical architectures from different perspectives. One example is the a16z article “Who Owns the Generative AI Platform?”, which comprehensively summarizes the current technical architecture of generative AI:

The main technical architecture of generative AI

Source: Who owns the generative AI platform?

That article divides the current architecture of Web2 generative AI into three layers, infrastructure (computing power), model, and application, and gives its views on how each layer is developing.

For infrastructure, Web2 infrastructure logic is still the mainstay, and very few infrastructure projects truly combine Web3 and AI. Infrastructure is also the layer that captures the most value at this stage: thanks to decades of deep cultivation in storage and computing, Web2 tech giants have made considerable gains by “selling shovels” during the current AI exploration stage.

For models, model authors are supposed to be the real creators and owners of AI, but at this stage very few business models allow them to capture the corresponding business value.

For applications, several verticals have accumulated hundreds of millions of dollars in revenue, but high maintenance costs and low user retention make it hard to sustain a long-term business model.

2.3 Examples of generative AI and Web3 applications

2.3.1 Applying AI to Analyze Web3’s Massive Data

**Data is at the heart of building technical barriers in future AI development.** To understand why, let’s look at a study on the sources of large model performance. The study shows that large AI models exhibit emergent abilities: as model size increases, accuracy jumps suddenly once a certain threshold is exceeded. As shown in the figure below, each graph represents a training task, and each line represents the performance (accuracy) of one large model. Experiments on different large models reached the same conclusion: once model size exceeds a certain threshold, performance on different tasks shows breakthrough growth.

The relationship between model size and model performance

Source: Emergent Analogical Reasoning in Large Language Models

To put it simply, quantitative changes in the scale of the model lead to a qualitative change in its performance. **The model size is related to the number of model parameters, the training time, and the quality of the training data.** At this stage, the gap in parameter count (the major companies have top R&D teams responsible for design) and in training time (everyone buys computing hardware from NVIDIA) is hard to open up. If you want to build a product that leads the competition, one way is to find the sharpest pain point in a niche and create a killer application, but this requires deep understanding of the target field and excellent insight. The other way is more practical and feasible: collect more, and more comprehensive, data than your competitors.

This also gives generative AI models a good entry point into the Web3 space. Existing large or foundation models are trained on huge amounts of data from different fields, and the uniqueness of Web3 on-chain data makes on-chain data models a feasible path worth looking forward to. There are currently two product approaches at the data layer in Web3. The first is to give data providers incentives, protecting the privacy and ownership of data owners while encouraging users to share the right to use their data with each other. Ocean Protocol offers a good model for sharing data in this way. The second is for the project team to integrate data and applications and provide users with a service for a specific task. **For example, Trusta Lab collects and analyzes users’ on-chain data and, through its MEDIA Score system, can provide services such as Sybil account analysis and on-chain asset risk analysis.**

2.3.2 AI Agent Applications for Web3

**The aforementioned on-chain AI Agent application is also in the limelight: with the help of a large language model, it provides users with quantifiable on-chain services while protecting user privacy.** According to a blog post from Lilian Weng, Head of AI Research at OpenAI, an AI Agent can be divided into four components, namely Agent = LLM + Planning + Memory + Tool use. As the core of the AI agent, the LLM is responsible for interacting with the outside world, learning from massive amounts of data, and expressing itself logically in natural language. The Planning + Memory part is similar to the concepts of action, policy, and reward in the reinforcement learning used to train AlphaGo. The task objective is broken down into small sub-goals, an optimized solution is learned step by step from the results and feedback of repeated attempts, and the information obtained is stored in different types of memory for different functions. Tool use refers to calling modular tools, retrieving Internet information, and connecting to proprietary information sources or APIs. It is worth noting that tools supply information that is missing from the model weights, which are difficult to change after pre-training.

Global diagram of the AI Agent

Source: LLM Powered Autonomous Agents
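The decomposition Agent = LLM + Planning + Memory + Tool use can be made concrete with a toy loop. The following is a minimal sketch, not an implementation from the cited blog post: `fake_llm` and `fake_balance_tool` are hypothetical stand-ins so the example runs offline, and the "tool_name: argument" step format is an assumption made only for this illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy agent: Agent = LLM + Planning + Memory + Tool use."""
    llm: Callable[[str], str]                 # stand-in for a language model
    tools: Dict[str, Callable[[str], str]]    # named external tools
    memory: List[str] = field(default_factory=list)

    def plan(self, goal: str) -> List[str]:
        # Planning: ask the "LLM" to split the goal into sub-tasks, one per line.
        return [s.strip() for s in self.llm(f"plan: {goal}").splitlines() if s.strip()]

    def run(self, goal: str) -> List[str]:
        results = []
        for step in self.plan(goal):
            # Tool use: a step written as "tool_name: argument" is routed to that tool.
            name, _, arg = step.partition(":")
            if name.strip() in self.tools:
                out = self.tools[name.strip()](arg.strip())
            else:
                out = self.llm(step)
            # Memory: keep every step and its result for later use.
            self.memory.append(f"{step} -> {out}")
            results.append(out)
        return results


def fake_llm(prompt: str) -> str:
    # Hypothetical canned model so the sketch runs without any external service.
    if prompt.startswith("plan:"):
        return "balance: 0xabc\nsummarize the balance"
    return f"LLM answer to '{prompt}'"


def fake_balance_tool(address: str) -> str:
    # Hypothetical on-chain lookup; returns a fixed value.
    return f"{address} holds 42 tokens"


agent = Agent(llm=fake_llm, tools={"balance": fake_balance_tool})
outputs = agent.run("report the balance of 0xabc")
```

In a real agent the planner, memory store, and tools would each be far richer; the point here is only how the four components hand work to one another.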

Combined with the specific implementation logic of AI Agent, we can boldly imagine that the combination of Web3 + AI Agent will bring infinite imagination, such as:

Current Web3 + AI Agent projects are still concentrated in the primary market or on the AI infrastructure side, and there is still no consumer-facing (To C) killer application. Even so, game-changing Web3 + AI projects that combine blockchain features such as distributed on-chain governance, zero-knowledge proof inference, model distribution, and improved interpretability are worth looking forward to.

2.3.3 Potential vertical applications of Web3 + AI

A. Applications in the field of education

The combination of Web3 and AI has ushered in a revolution in education, where generative virtual reality classrooms are a compelling innovation. By embedding AI technology into an online learning platform, students can get a personalized learning experience that generates customized educational content based on their learning history and interests. This personalized approach is expected to increase students’ motivation and effectiveness in learning, bringing education closer to individual needs.

Students participate in virtual reality classes through immersive VR devices

Source: V-SENSE Team

In addition, the token model credit incentive is also an innovative practice in the field of education. Through blockchain technology, students’ credits and achievements can be encoded into tokens to form a digital credit system. Such incentives encourage students to actively participate in learning activities, creating a more participatory and motivating learning environment.

At the same time, inspired by the recently popular SocialFi project FriendTech, a similar key pricing logic bound to IDs can also be used to build a peer evaluation system, which also brings more social elements to education. With the help of the immutability of blockchain, the evaluation among students is more fair and transparent. This mutual evaluation mechanism not only helps to cultivate students’ teamwork and social skills, but also provides a more comprehensive and multi-angle assessment of students’ performance, introducing more diverse and comprehensive evaluation methods to the education system.

B. Medical applications

In healthcare, the combination of Web3 and AI is driving the development of federated learning and distributed inference. By federating distributed computing and machine learning, healthcare professionals can share data on a large scale for deeper, more comprehensive group learning. This collective intelligence approach can accelerate the development of disease diagnosis and treatment options, and advance the field of medicine.
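To illustrate the idea of learning from distributed data without pooling the raw records, here is a minimal sketch of one common aggregation step, weighted averaging of locally trained parameters (often called federated averaging). The article does not name a specific algorithm, so this choice is an assumption, and the hospital weights and sample counts are made-up illustrative values.

```python
from typing import List, Tuple


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Combine local model weights, weighting each site by its sample count.

    Each update is (weights, number_of_local_samples). Only the weights leave
    the site; the underlying patient records are never shared.
    """
    total_samples = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [
        sum(weights[i] * n for weights, n in updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical hospitals with locally trained two-parameter models.
hospital_updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_weights = federated_average(hospital_updates)
```

The site with more samples pulls the global model further toward its local weights, which is why the second hospital dominates the result in this example.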

Privacy protection is a key issue that cannot be ignored in medical applications. Through the decentralization of Web3 and the immutability of blockchain, patients’ medical data can be stored and transmitted more securely. Smart contracts can achieve precise control and permission management of medical data, ensuring that only authorized personnel can access patients’ sensitive information, thereby maintaining the privacy of medical data.

C. Applications in the field of insurance

In the insurance sector, the integration of Web3 and AI is expected to bring more efficient and intelligent solutions to traditional businesses. For example, in auto and home insurance, the use of computer vision technology enables insurers to more efficiently assess the value and risk level of property through image analysis and valuation. This provides insurance companies with more refined and personalized pricing strategies, and improves the level of risk management in the insurance industry.

Use AI technology for claims valuation

Source: Tractable Inc

At the same time, on-chain automated claims settlement is also an innovation in the insurance industry. Based on smart contracts and blockchain technology, the claims process can be more transparent and efficient, reducing the possibility of cumbersome procedures and human intervention. This not only increases the speed of claims settlement, but also reduces operational costs, resulting in a better experience for insurers and customers.

Dynamic premium adjustment is another innovative practice. Through real-time data analysis and machine learning algorithms, insurance companies can adjust premiums more accurately and promptly, and personalize pricing according to the actual risk profile of the insured. This not only makes premiums more equitable, but also incentivizes insured people to adopt healthier and safer behaviors, promoting risk management and preventive measures for society as a whole.

D. Applications in the field of copyright

In the field of copyright, the combination of Web3 and AI has brought a whole new paradigm to digital content creation, curation proposals, and code development. Through smart contracts and decentralized storage, copyright information for digital content can be better protected, and creators of works can more easily track and manage their intellectual property. At the same time, through blockchain technology, transparent and tamper-proof creative records can be established, providing a more reliable means for the traceability and authentication of works.

The innovation of the working model is also an important change in the field of copyright. Token-incentivized work collaboration encourages creators, planners, and developers to participate in the project by combining work contributions with token incentives. This not only promotes collaboration between creative teams, but also provides participants with the opportunity to directly benefit from the success of the project, leading to more great work.

On the other hand, the application of the token as proof of copyright reshapes the model of benefit distribution. Through the dividend mechanism automatically executed by smart contracts, each participant in the work can get the corresponding profit share in real time when the work is used, sold or transferred. This decentralized dividend model effectively solves the problems of opacity and lag in the traditional copyright model, and provides a fairer and more efficient benefit distribution mechanism for creators.
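The automatic dividend mechanism described above amounts to a pro-rata split of each payment among token holders. The sketch below shows that arithmetic in plain Python rather than in a contract language. The holder names and share counts are hypothetical, and giving the rounding remainder to the largest holder is one possible convention, not something the article specifies.

```python
from typing import Dict


def split_revenue(amount: int, shares: Dict[str, int]) -> Dict[str, int]:
    """Split `amount` (in the token's smallest unit) in proportion to `shares`."""
    total_shares = sum(shares.values())
    payouts = {who: amount * s // total_shares for who, s in shares.items()}
    # Integer division can leave a few units undistributed; assign them to the
    # largest holder so that the payouts always add up to the full amount.
    remainder = amount - sum(payouts.values())
    largest_holder = max(shares, key=shares.get)
    payouts[largest_holder] += remainder
    return payouts


# Hypothetical copyright tokens held by three contributors to one work.
payouts = split_revenue(1000, {"author": 50, "illustrator": 30, "editor": 20})
```

Working in integer units mirrors how on-chain token balances are usually represented and avoids floating-point rounding in payouts.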

E. Metaverse applications

In the field of the metaverse, the integration of Web3 and AI provides new possibilities for creating low-cost blockchain game content filled with AIGC. Virtual environments and characters generated by AI algorithms can enrich blockchain games, give users a more vivid and diverse gaming experience, and reduce the manpower and time cost of production.

Digital human production is an innovation in metaverse applications. By combining appearance generation that is detailed down to the hair with reasoning built on large language models, the generated digital humans can play various roles in the metaverse, interact with users, and even participate in digital twins of real-world scenarios. This provides a more realistic and profound experience for the development of virtual reality, and promotes the wide application of digital virtual human technology in entertainment, education, and other fields.

Automatically generating advertising content from on-chain user profiles is an intelligent advertising application in the metaverse field. By analyzing users’ behavior and preferences in the metaverse, AI algorithms can generate more personalized and engaging ad content, improving click-through rates and user engagement for ads. This method of ad generation is not only more in line with the interests of users, but also provides advertisers with a more efficient way to promote.

Generative interactive NFTs are a compelling technology in the metaverse space. By combining NFTs with generative design, users can participate in the creation of their own NFT artwork in the metaverse, giving it interactivity and uniqueness. This opens up new possibilities for the creation and trading of digital assets, driving the development of digital art and the virtual economy in the metaverse.

3. Web3 Related Targets

Here the author has selected five projects to give a glimpse of the current state of generative AI projects in Web3: Render Network and Akash Network as veteran leaders in general AI infrastructure and the AI track, Bittensor as a popular project in the model category, Alethea.ai as a strong generative AI application project, and Fetch.ai as a landmark project in the field of AI agents.

3.1 Render Network ($RNDR)

Render Network was founded in 2017 by Jules Urbach, the founder of its parent company, OTOY. OTOY’s core business is graphics rendering on the cloud, and it has worked on Oscar-winning film and television projects, with Google and Mozilla co-founders as advisors, and has worked on several projects with Apple. The purpose of Render Network, which extends from OTOY to the Web3 field, is to use the distributed nature of blockchain technology to connect smaller-scale rendering and AI demand and resources to a decentralized platform, thereby saving small workshops the cost of leasing expensive centralized computing resources (such as AWS, MS Azure and Alibaba Cloud), and also providing income generation for those with idle computing resources.

Because Render comes from OTOY, the company that independently developed the high-performance renderer Octane Render, and has a clear business logic, it was regarded from launch as a Web3 project with real demand and fundamentals of its own. During the generative AI boom, the demand for distributed verification and distributed inference tasks was a good fit for Render’s technical architecture and was considered one of its promising future directions. At the same time, Render has held the leading position in the Web3 AI track for many years and has taken on a certain meme quality.

In February 2023, Render Network announced an upcoming update introducing new pricing tiers and a community-voted $RNDR price stabilization mechanism (the launch date has not yet been confirmed). It also announced that the project would migrate from Polygon to Solana, along with an upgrade of $RNDR tokens to $RENDER tokens based on the Solana SPL standard, which was completed in November 2023.

The new pricing tier system released by Render Network divides on-chain services into three tiers, from high to low, corresponding to different price points and rendering service quality. The party requesting the rendering chooses the tier.

The three tiers of Render Network’s new pricing system

The community-voted price stabilization mechanism for $RNDR replaces the previous irregular buybacks with a “Burn-and-Mint Equilibrium (BME)” model, making it more obvious that $RNDR is positioned as a price-stable payment token rather than a long-term holding asset. The specific business process in a BME epoch is shown in the following diagram:

Note: Render Network collects 5% of the fees paid by the purchaser of the product from each transaction for the operation of the project.

Burn-and-Mint Equilibrium Epoch

Credit to Petar Atanasovski

Source: Medium

According to the preset rules, each BME epoch mints a preset number of new tokens (the number gradually decreases over time). The newly minted tokens are distributed to three parties:

1. Product creators, who earn in two ways:
   - Rewards for completing tasks. Each node is rewarded according to the number of rendering tasks it completes.
   - Online rewards. Each node is rewarded according to how long it stays online on standby, which encourages nodes to remain available when resources are limited.
2. Product purchasers. Similar to a shopping rebate, purchasers can receive a rebate of up to 100% in $RNDR tokens to encourage continued use of the Render Network.
3. DEX (decentralized exchange) liquidity providers. Liquidity providers on partner DEXs are rewarded according to the amount of $RNDR they stake, because they ensure that enough $RNDR can be bought at a reasonable price when it needs to be burned.
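The token flow in one BME epoch can be sketched as simple bookkeeping. This is an illustrative model only: the 5% project fee comes from the note above, while burning the remaining 95% of each payment, the emission figure, and the 50/30/20 split among the three parties are assumptions chosen for the example and are not Render Network's actual parameters.

```python
from dataclasses import dataclass
from typing import Dict, List

PROJECT_FEE_PERCENT = 5  # from the note in the text: 5% of each payment funds operations


@dataclass
class EpochResult:
    burned: int
    project_fee: int
    minted: Dict[str, int]
    net_supply_change: int


def run_epoch(payments: List[int], emission: int, split_percent: Dict[str, int]) -> EpochResult:
    """Account for one epoch: burn what purchasers paid, then mint the preset emission."""
    assert sum(split_percent.values()) == 100, "split must cover the whole emission"
    paid = sum(payments)
    project_fee = paid * PROJECT_FEE_PERCENT // 100
    burned = paid - project_fee  # assumption: everything except the fee is burned
    minted = {party: emission * pct // 100 for party, pct in split_percent.items()}
    # Supply shrinks when more tokens are burned than minted, and grows otherwise.
    return EpochResult(burned, project_fee, minted, emission - burned)


# Hypothetical split among the three parties named in the text.
HYPOTHETICAL_SPLIT = {"creators": 50, "purchasers": 30, "liquidity_providers": 20}
result = run_epoch(payments=[400, 600], emission=900, split_percent=HYPOTHETICAL_SPLIT)
```

With these made-up numbers, usage (burn) slightly exceeds emission, so supply contracts during the epoch; lower usage would make the same emission inflationary.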

Source: coingecko.com

The price trend of $RNDR over the past year shows that, as the leading project of the Web3 AI track for many years, $RNDR benefited from the AI boom driven by ChatGPT in late 2022 and early 2023, and with the release of the new token mechanism its price reached a high in the first half of 2023. After moving sideways in the second half of the year, the price of $RNDR reached a multi-year high, helped by the AI recovery that followed OpenAI’s new launch event, Render Network’s migration to Solana, and the imminent implementation of the new token mechanism. Since the fundamentals of $RNDR have changed very little, investors need to be more prudent about position sizing and risk management going forward.

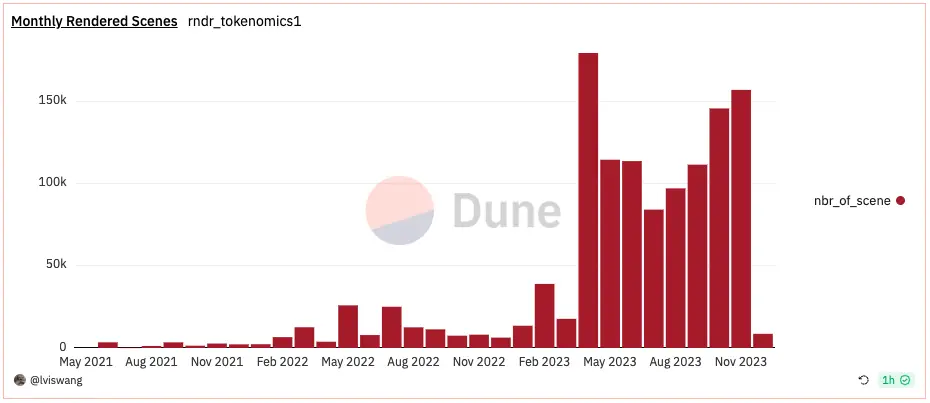

The number of Render Network nodes per month

The number of scenes rendered on Render Network per month

Source: Dune.com

The Dune dashboard also shows that the total number of rendering jobs has increased since the beginning of 2023, while the number of rendering nodes has not. Combined with the generative AI boom at the end of 2022, it is reasonable to infer that the additional rendering jobs are generative AI-related tasks. It is hard to say at present whether this demand will last, so it needs continued observation.

3.2 Akash Network ($AKT)

Akash Network is a decentralized cloud computing platform that aims to provide developers and enterprises with a more flexible, efficient, and cost-effective cloud computing solution. Its “super cloud” platform is built on distributed blockchain technology and uses the decentralized nature of blockchain to give users a cloud infrastructure on which applications can be deployed and run globally, with diverse computing resources including CPUs, GPUs, and storage.

Akash Network’s founders, Greg Osuri and Adam Bozanich, are serial entrepreneurs who have worked together for many years, each with years of project experience, having co-founded the Overclock Labs project, which is still a core participant in Akash Network. The founding team had a clear vision of Akash Network’s primary mission, which was to reduce cloud computing costs, increase availability, and increase user control over computing resources. Through open bidding, which incentivizes resource providers to open up idle computing resources in their networks, Akash Network enables more efficient use of resources, thereby providing more competitive prices for resource demanders.
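The open-bidding idea can be pictured as a reverse auction: providers quote prices and the tenant takes the cheapest offer that meets its requirements. The sketch below is a simplified illustration under that assumption. The `Bid` fields, provider names, and prices are hypothetical, and Akash's actual on-chain marketplace involves more steps than this selection rule.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bid:
    provider: str
    price_per_hour: int   # in the smallest unit of the payment token
    gpus: int


def select_bid(bids: List[Bid], gpus_needed: int, max_price: int) -> Optional[Bid]:
    """Pick the cheapest bid that offers enough GPUs within the tenant's budget."""
    eligible = [b for b in bids if b.gpus >= gpus_needed and b.price_per_hour <= max_price]
    return min(eligible, key=lambda b: b.price_per_hour) if eligible else None


# Hypothetical bids from three providers with idle capacity.
bids = [Bid("provider-a", 120, 4), Bid("provider-b", 90, 2), Bid("provider-c", 100, 4)]
winner = select_bid(bids, gpus_needed=4, max_price=150)
```

Here the cheapest bid overall is rejected because it offers too few GPUs, which shows why price competition only applies among providers that can actually serve the workload.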

Akash Network started the Akash Network Economics 2.0 update program in January 2023, with the goal of addressing many of the shortcomings of the current token economy, including:

According to the official website, the solutions proposed in the Akash Network Economics 2.0 plan include introducing stablecoin payments, adding maker and taker fees to increase protocol revenue, increasing incentives for resource providers, and increasing community incentives. Of these, the stablecoin payment and maker/taker fee functions have already been launched.

As the native token of Akash Network, $AKT has a variety of uses in the protocol, including staking for validation (security), incentives, network governance, and payment of transaction fees. According to the official website, the total supply of $AKT is 388M, and as of November 2023, 229M had been unlocked, or about 59%. The genesis tokens distributed at the launch of the project were fully unlocked in March 2023 and have entered secondary-market circulation. The distribution ratio of genesis tokens is as follows:

Notably, in terms of value capture, the whitepaper describes a feature for $AKT that has not yet been implemented: Akash plans to charge a “take fee” on each successful lease and send these fees to the Take Income Pool so that they can be distributed to holders. The plan sets the fee at 10% for transactions in $AKT and 20% for transactions in other cryptocurrencies. In addition, Akash plans to reward holders who lock up their $AKT for longer periods, so investors who hold longer will be eligible for more generous rewards. If this plan is launched, it could become a major driver of the token price and would also make the project easier to value.
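The proposed fee schedule is simple enough to state as a small function. This is a sketch of the arithmetic only, using the 10% and 20% rates quoted above; the function name, the basis-point representation, and the lease amounts are assumptions, and the feature itself is described in the text as not yet implemented.

```python
# Rates in basis points (1 bp = 0.01%), taken from the percentages in the text.
TAKE_RATE_BPS = {"AKT": 1000}      # 10% for leases paid in $AKT
DEFAULT_TAKE_RATE_BPS = 2000       # 20% for leases paid in any other currency


def take_fee(lease_amount: int, currency: str) -> int:
    """Return the fee (in the currency's smallest unit) sent to the income pool."""
    rate = TAKE_RATE_BPS.get(currency, DEFAULT_TAKE_RATE_BPS)
    return lease_amount * rate // 10_000


fee_in_akt = take_fee(500, "AKT")
fee_in_other = take_fee(500, "USDC")
```

The lower rate for $AKT payments gives tenants a direct reason to pay in the native token, which is how the fee design ties protocol usage to token demand.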

Source: coingecko.com

As the price trend on coingecko.com shows, the price of $AKT rose in mid-August and again in late November 2023, but less than other AI-track projects over the same periods, which may reflect current capital sentiment. Overall, as one of several high-quality projects in the AI track, Akash has better fundamentals than most of its competitors. As the AI industry develops and cloud computing resources become scarcer, Akash Network is well placed to benefit from the next AI wave.

3.3 Bittensor ($TAO)

Readers familiar with the technical architecture of $BTC will find the design of Bittensor easy to understand. When designing Bittensor, its authors borrowed many characteristics from $BTC, the veteran of crypto, including a total supply of 21 million tokens, a production halving about every four years, and a consensus mechanism involving PoW. Specifically, imagine the original BTC production process, then replace “mining”, which computes random numbers that create no real value, with training and validating AI models, and reward miners according to the performance and reliability of those models. That is a simple summary of the architecture of the Bittensor ($TAO) project.
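The link between a 21 million cap and periodic halving is a geometric series: if each era issues half as much as the one before, total issuance converges to twice the first era's amount, so the first era must issue 10.5 million tokens. The sketch below checks this with exact fractions. It is an idealized model that assumes equal-length eras and exact halving, not a description of Bittensor's actual emission code.

```python
from fractions import Fraction

MAX_SUPPLY = 21_000_000


def cumulative_supply(eras: int) -> Fraction:
    """Tokens issued after `eras` halving eras, each about four years long."""
    first_era = Fraction(MAX_SUPPLY, 2)  # 10.5M, so that the infinite sum is 21M
    return sum((first_era / 2**k for k in range(eras)), Fraction(0))


after_one_era = cumulative_supply(1)
after_two_eras = cumulative_supply(2)
after_ten_eras = cumulative_supply(10)
```

Half of all tokens are issued in the first era and three quarters by the end of the second, which is why early participation is rewarded so heavily under this kind of schedule.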

The Bittensor project was founded in 2019 by two AI researchers, Jacob Steeves and Ala Shaabana, and its main framework is based on a white paper written by a mysterious author, Yuma Rao. In short, it defines a permissionless open-source protocol and builds a network of interconnected subnets, each responsible for a different task (machine translation, image recognition and generation, large language models, etc.). Excellent task completion is rewarded, and subnets can interact with and learn from one another.

Without exception, the large AI models currently on the market are products of the enormous computing resources and data invested by technology giants. AI products trained this way do perform impressively, but they also carry a high risk that centralized control will be abused. The Bittensor infrastructure is designed to let a network of expert models communicate with and learn from each other, which lays the foundation for decentralized training of large models. Bittensor’s long-term vision is to compete with the closed-source models of giants such as OpenAI, Meta, and Google, matching their inference performance while keeping the model decentralized.

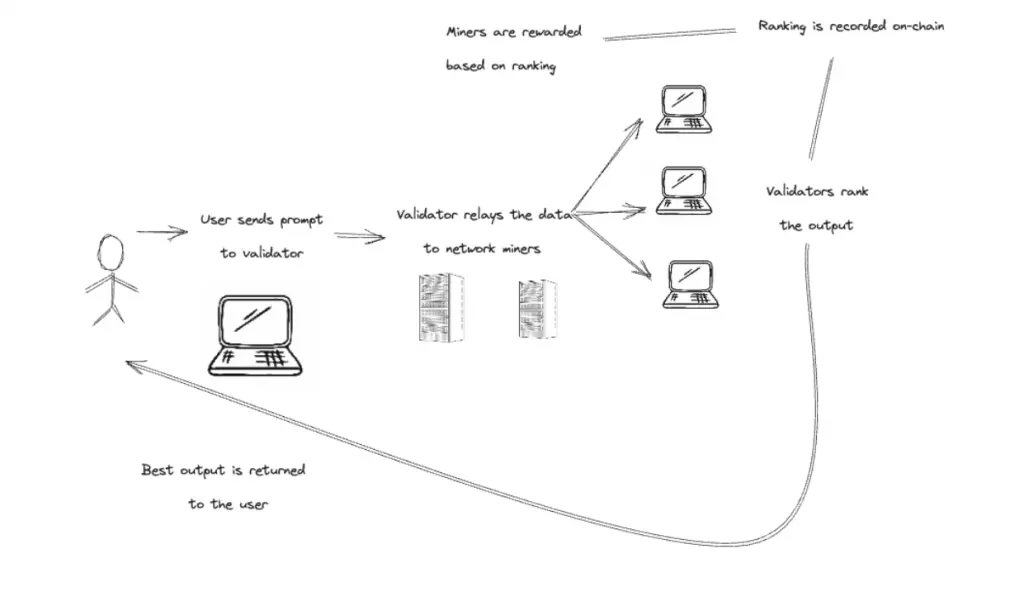

The technical core of the Bittensor network is the consensus mechanism designed by Yuma Rao, known as Yuma consensus, which mixes PoW and PoS. On the supply side, the main participants are “servers” (i.e., miners) and “validators”; on the demand side are “clients” (i.e., customers) who use the models in the network. Miners provide pre-trained models for the current subnet’s task, and the incentives they receive depend on the quality of those models. Validators verify model performance and act as intermediaries between miners and clients. The specific process is as follows:

Note that in the vast majority of subnets, Bittensor itself does not train any models. Its role is closer to that of a link between model providers and model consumers, and on that basis it uses the interaction between small models to improve performance on different tasks. At present, 30 subnets are online (or have been online), each corresponding to a different task model.

As Bittensor’s native token, $TAO plays a pivotal role in the ecosystem: it is used to create subnets, register in subnets, pay for services, stake to validators, and more. In keeping with the project’s tribute to the spirit of BTC, $TAO opted for a fair launch, meaning that all tokens are generated by contributing to the network. Currently, the daily output of $TAO is around 7,200 tokens, divided equally between miners and validators. Since the project launched, about 26.3% of the total supply of 21 million has been generated, of which 87.21% has been staked for validation. The project is also designed to halve output every four years (as BTC does); the next halving is expected on September 20, 2025, which could also be a significant driver of price increases.
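The issuance figures above can be cross-checked with a small sketch. It assumes a BTC-style geometric schedule in which the first era issues half of the cap at a constant daily rate and every later era has the same length; that trigger rule and all function names are assumptions for illustration, not Bittensor’s actual on-chain logic.

```python
TOTAL_SUPPLY = 21_000_000  # cap stated in the article
DAILY_EMISSION = 7_200     # approximate daily output stated in the article
ISSUED_FRACTION = 0.263    # share of the cap issued so far, per the article


def daily_split(daily_emission: float) -> tuple[float, float]:
    """Split one day's emission equally between miners and validators."""
    half = daily_emission / 2
    return half, half


def era_length_days(total_supply: float, initial_daily: float) -> float:
    """Days per era, assuming the first era issues half of the cap."""
    return (total_supply / 2) / initial_daily


def supply_after(days: float, total_supply: float, initial_daily: float) -> float:
    """Cumulative issuance after `days`, halving the rate at each era boundary."""
    era = era_length_days(total_supply, initial_daily)
    supply, rate, remaining = 0.0, initial_daily, days
    while remaining > 0 and rate > 1e-9:
        step = min(remaining, era)
        supply += step * rate
        remaining -= step
        rate /= 2
    return supply


if __name__ == "__main__":
    era = era_length_days(TOTAL_SUPPLY, DAILY_EMISSION)
    print("miner / validator share per day:", daily_split(DAILY_EMISSION))
    print("era length in years:", era / 365.25)
    print("tokens issued so far:", ISSUED_FRACTION * TOTAL_SUPPLY)
    print("supply after two eras:", supply_after(2 * era, TOTAL_SUPPLY, DAILY_EMISSION))
```

Under this assumption, an era lasts about 1,458 days, which is consistent with the roughly four-year halving interval the article describes.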

Credit: taostats.io

The price trend shows that $TAO has risen sharply since the end of October 2023. The main driver is presumably the new AI boom set off by OpenAI’s press conference, which rotated capital into the AI sector. At the same time, as an emerging project in the Web3 + AI track, $TAO attracts funds through its project quality and long-term vision. However, we have to admit that, as with other AI projects, although the combination of Web3 and AI has great potential, its real-world business applications are not yet sufficient to sustain a profitable project over the long term.

3.4 Alethea.ai ($ALI)

Founded in 2020, Alethea.ai is a project dedicated to bringing decentralized ownership and decentralized governance to generative content using blockchain technology. Its founders believe that generative AI will usher in an era of information glut, in which large amounts of digital content are simply copied and pasted or generated with one click, and the people who created the value in the first place cannot benefit from it. Connecting on-chain primitives (such as NFTs) with generative AI makes it possible to establish ownership of generative AI and its content, and to build community governance on that basis.

Driven by this philosophy, Alethea.ai initially introduced a new NFT standard, iNFT, which uses the Intelligence Pod to embed AI animation, speech synthesis, and even generative AI into images. Alethea.ai also partnered with artists to turn their artwork into iNFTs, which fetched $478,000 at Sotheby’s.

Inject soul into NFTs

Source: Alethea.ai

Alethea.ai later launched the AI Protocol, which allows any developer or creator of generative AI to build on the iNFT standard permissionlessly. To set an example for other projects on the AI Protocol, Alethea.ai also drew on GPT-style large models to launch CharacterGPT, a tool for making interactive NFTs. More recently, Alethea.ai released Open Fusion, which allows any ERC-721 NFT on the market to be combined with an Intelligence Pod and released on the AI Protocol.

Alethea.ai’s native token is $ALI, and its main uses are fourfold:

Source: coingecko.com

As the use cases of $ALI suggest, the token’s value capture currently remains at the narrative level, and the price movement over the past year supports this inference. $ALI has reaped the dividends of the generative AI boom led by ChatGPT since December 2022, and the announcement of the Open Fusion feature in June 2023 brought another wave of growth. Apart from these two rallies, the price of $ALI has been on a downward trend, and even the AI boom in late 2023 failed to lift it to the average level of projects in the same track.

In addition to the native token, let’s look at how Alethea.ai’s iNFTs (including officially released collections) have performed on the NFT market.

Daily sales of Intelligence Pods on OpenSea

Daily sales of Revenants Collection on OpenSea

Source: Dune.com

Dune’s dashboard statistics show that sales of both the Intelligence Pods, which were sold to third parties, and the Revenants collection, which Alethea.ai released as a first party, gradually dried up some time after the initial release. The author believes the main reason is that once the initial novelty faded, there was no real utility or community momentum to retain users.

3.5 Fetch.ai ($FET)

Fetch.ai is a project dedicated to promoting the convergence of artificial intelligence and blockchain technology. The company’s goal is to build a decentralized, intelligent economy that powers economic activity between intelligent agents through a combination of machine learning, blockchain, and distributed ledger technology.

Fetch.ai was founded in 2019 by three UK-based scientists: Humayun Sheikh, Toby Simpson, and Thomas Hain. The founders come from a wide range of backgrounds: Humayun Sheikh was an early investor in DeepMind, Toby Simpson has served as an executive at several companies, and Thomas Hain is a professor of artificial intelligence at the University of Sheffield. The founding team’s deep background has brought the company rich industry resources spanning traditional IT companies, high-profile blockchain projects, medical and supercomputing projects, and other fields.

Fetch.ai’s mission is to build a decentralized web platform consisting of autonomous economic agents and AI applications, enabling developers to accomplish preset target tasks by creating autonomous agents. The core technology of the platform is its unique three-tier architecture:

Building on this architecture, Fetch.ai has also launched several follow-up products and services, such as CoLearn (shared machine learning models between agents) and Agentverse (a cloud hosting service for smart agents), to enable users to develop their own smart agents on its platform.

In terms of tokens, $FET, as the native token of Fetch.ai, fills the usual roles of paying gas, staking for validation, and purchasing services within the network. More than 90% of $FET tokens have been unlocked so far, and the distribution is as follows:

Since its launch, Fetch.ai has raised multiple rounds of funding by diluting token holdings, most recently on March 29, 2023, when it received $30 million from DWF Labs. Because the $FET token does not capture value from the project’s revenue, its price is driven mainly by project updates and by market sentiment toward the AI track. Accordingly, the price of $FET soared by more than 100% both at the beginning and at the end of 2023.

Source: coingecko.com

Compared with the way other blockchain projects develop and gain attention, Fetch.ai’s path looks more like that of a Web2.0 AI startup: it focuses on polishing its technology, building a reputation, and finding profit points through continuous financing and extensive partnerships. This approach leaves plenty of room for future applications to be built on Fetch.ai, but it also makes the platform less attractive to other blockchain projects that could energize its ecosystem (one of Fetch.ai’s founders personally started the Fetch.ai-based DEX project Mettalex DEX, which eventually failed). For an infrastructure-oriented project, a stagnant ecosystem makes it difficult to increase the intrinsic value of Fetch.ai.

Fourth, generative AI has a promising future

Nvidia CEO Jensen Huang has called the release of generative models the “iPhone moment” of AI, and at this stage the scarce resource for producing AI is infrastructure centered on high-performance computing chips. As the AI sub-track that has locked in the most funds in Web3, AI infrastructure has long been a focus of investors’ long-term investment and research. As chip giants gradually upgrade their computing hardware, AI computing power improves, and new AI capabilities are unlocked, more AI infrastructure projects in specialized niches of Web3 can be expected to emerge, possibly even chips designed and produced specifically for AI training in Web3.

Although consumer-facing (ToC) generative AI products are still at an experimental stage, some enterprise-facing (ToB) industrial-grade products have already shown great potential. One of them is “digital twin” technology, which migrates real-world scenarios into the digital realm. Combined with the digital twin scientific computing platform that NVIDIA released as part of its metaverse vision, and given that a huge amount of industrial data value has yet to be unlocked, generative AI will become an important aid for digital twins in industrial scenarios. Moving further into Web3, areas such as the metaverse, digital content creation, and real-world assets will all be affected by AI-powered digital twin technology.

The development of new interactive hardware is another link that cannot be ignored. Historically, every hardware innovation in computing has brought seismic change and new development opportunities, such as the now-commonplace computer mouse or the iPhone with its multi-touch capacitive screen. The Apple Vision Pro, which is slated to launch in the first quarter of 2024, has already attracted worldwide attention with its stunning demo and should bring unexpected changes and opportunities to various industries when it actually ships. Because its content is quick to produce, spreads fast, and reaches a wide audience, the entertainment sector is often the first to benefit from each hardware update. This includes visual entertainment tracks in Web3 such as the metaverse, blockchain games, and NFTs, which deserve readers’ long-term attention and research.

In the long run, the development of generative AI is a process of quantitative change leading to qualitative change. At its core, ChatGPT is a solution to the problem of question answering with reasoning, which academia has watched and studied for a long time. After long-term iteration of data and models, it finally reached the level of GPT-4, which amazed the world. The same is true of AI applications in Web3: they are still at the stage of bringing Web2 models into Web3, and models developed entirely on Web3 data have not yet emerged. Far-sighted project teams will need to invest substantial resources in researching practical Web3 problems so that Web3’s own ChatGPT-level killer app can gradually come within reach.

At this stage, generative AI offers many directions worth exploring, one of which is the Chain-of-Thought technique that underpins logical reasoning. Put simply, chain of thought has enabled large language models to make a qualitative leap in multi-step reasoning. However, it has not solved the problem of large models’ insufficient reasoning ability in complex logic, and to some extent it has exposed that problem. Readers interested in this topic should read the paper by the original authors of chain of thought.
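To make the idea concrete, the following minimal sketch contrasts a direct few-shot prompt with a chain-of-thought prompt, in which each worked example spells out its intermediate steps before the answer. The class, the function names, and the sample question are illustrative assumptions and are not taken from the original paper or from any library.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    reasoning: str  # intermediate steps written out in natural language
    answer: str


def build_direct_prompt(examples: list[Example], question: str) -> str:
    """Few-shot prompt whose examples show only the final answer."""
    parts = [f"Q: {ex.question}\nA: {ex.answer}" for ex in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def build_cot_prompt(examples: list[Example], question: str) -> str:
    """Few-shot prompt whose examples show the reasoning before the answer."""
    parts = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in examples
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Illustrative worked example (made up for this sketch).
demo = Example(
    question="A wallet holds 5 tokens and receives 2 transfers of 3 tokens each. "
             "How many tokens does it hold?",
    reasoning="The wallet starts with 5 tokens. 2 transfers of 3 tokens add 6 tokens. "
              "5 + 6 = 11.",
    answer="11",
)

if __name__ == "__main__":
    new_question = "A pool has 10 tokens and pays out 4 twice. How many remain?"
    print(build_direct_prompt([demo], new_question))
    print("-----")
    print(build_cot_prompt([demo], new_question))
```

The only difference between the two prompts is the written-out reasoning in the worked example, which nudges the model to produce its own intermediate steps for the new question.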

The success of ChatGPT has led to the emergence of various popular GPT-themed chains in Web3, but a simple and crude combination of GPT and smart contracts cannot truly meet users’ needs. About a year has passed since the release of ChatGPT, which in the long run is a mere blink of an eye. Future products should start from the real needs of Web3 users themselves, and as Web3 technology matures, I believe the application of generative AI in Web3 holds infinite possibilities worth looking forward to.

References

Google Cloud Tech - Introduction to Generative AI

AWS - What is Generative AI

The Economics of Large Language Models

As soon as the Diffusion Model is forced, the GAN becomes obsolete???

Illustrating Reinforcement Learning from Human Feedback (RLHF)

Generative AI and Web3

Who Owns the Generative AI Platform?

Apple Vision Pro Full Moon Rethinks: XR, RNDR, and the Future of Spatial Computing

How is AI minted as an NFT?

Emergent Analogical Reasoning in Large Language Models

Akash Network Token (AKT) Genesis Unlock Schedule and Supply Estimates